Out-of-distribution sky color and image segmentation


Stanislav Fort (Twitter, Scholar and GitHub)

| Stanford University Graduate Residences | A poster for the movie The Martian |
|---|---|

TL;DR: I took a photo of a parking lot at Stanford, a poster for the movie The Martian and several scenes with unusual sky colors from the sci-fi series the Expanse, cropped out their skies, recolored them blue, green, yellow, red and purple, and verified that Mask RCNN has no trouble segmenting out people and cars in them despite their very out-of-distribution sky colors. This shows that large vision systems are quite robust to such semantic dataset shift, and that they will not immediately get confused if they see e.g. an unusual green sky.

Introduction

Recently I was talking to a friend who thought that a deep neural network trained on a distribution of natural images would not be able to handle an image with an unnatural green sky. My intuition was that the network would likely work just fine, and to check that I ran a series of experiments on some of my own photos as well as images from movies. I used a pre-trained Mask RCNN image segmentation model from a Google Colab notebook, together with the associated Google Cloud tutorial, to run my experiments.

To get the appropriate photos, I first looked for science fiction images with unusual sky colors that also had humans (possibly in space suits) and cars/vehicles in them. This proved to be more difficult than I thought. I managed to get images of Mars from the movie The Martian and the sci-fi series the Expanse (with orange skies) and an image of Ganymede with a black sky. To make the problem more challenging, as Mars could plausibly have been in the dataset, I took the poster for the movie The Martian, cropped out the astronaut, and recolored the background by changing its hue, contrast, and brightness in GIMP. For a more realistic image, I took a photo of the Stanford University Graduate Residences, cropped out the sky, and changed its color in a similar way. In the Stanford photo, the objects to be detected were cars, while the other images had astronauts/people in them.
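The recoloring itself was done by hand in GIMP, but the core idea (change the hue of only the sky pixels and leave everything else alone) can be sketched in a few lines. The following is a minimal illustration using only the Python standard library, not the procedure actually used for the post: the function name `recolor_masked_pixels`, the nested-list image format and the hand-made mask are all assumptions made for the example, and it only replaces the hue, without the contrast and brightness adjustments mentioned above.

```python
import colorsys


def recolor_masked_pixels(image, mask, target_hue):
    """Return a copy of `image` in which masked pixels take `target_hue`.

    image: list of rows of (r, g, b) tuples with integer values in 0..255.
    mask: list of rows of booleans, True where the pixel belongs to the sky.
    target_hue: hue in [0, 1), e.g. 0 = red, 1/3 = green, 2/3 = blue.
    """
    out = []
    for img_row, mask_row in zip(image, mask):
        new_row = []
        for (r, g, b), is_sky in zip(img_row, mask_row):
            if is_sky:
                # Keep saturation and value, swap only the hue.
                _, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
                r2, g2, b2 = colorsys.hsv_to_rgb(target_hue, s, v)
                new_row.append((round(r2 * 255), round(g2 * 255), round(b2 * 255)))
            else:
                # Foreground pixels are copied unchanged.
                new_row.append((r, g, b))
        out.append(new_row)
    return out


# Toy 2x2 image: the top row is blue "sky", the bottom row is gray "ground".
image = [[(60, 120, 240), (60, 120, 240)],
         [(90, 90, 90), (90, 90, 90)]]
mask = [[True, True],
        [False, False]]

green_sky = recolor_masked_pixels(image, mask, target_hue=1 / 3)
```

Because saturation and value are preserved, clouds and gradients in the sky keep their structure and only the color changes, which is roughly what a hue shift in an image editor does.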

The Stanford University Graduate Residences

The first experiment I performed was on a picture I took in March 2021 of the Stanford University Graduate Residences and their parking lot. The sky at Stanford was clear blue, and the parking lot nearby served as a good source of cars to segment. I erased the car registration numbers for privacy reasons. Here’s the original image and its associated segmentation:

I cropped out the sky by hand and changed its color to green, purple and yellow, as shown below:

The color of the sky had no discernible effect on the segmentation of the cars in the foreground. The network didn’t get confused by the out-of-distribution sky color at all.
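The comparison in this post is visual, but "no discernible effect" could also be quantified by comparing the mask predicted for each object on the original image with the mask predicted on a recolored one. A common measure is intersection over union (IoU). The sketch below is only an illustration under assumptions: the helper `mask_iou` and the tiny hand-written masks are hypothetical and are not outputs of the network.

```python
def mask_iou(mask_a, mask_b):
    """Intersection over union of two same-shaped boolean masks (lists of rows)."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += int(a and b)
            union += int(a or b)
    # Two empty masks are treated as identical by convention here.
    return intersection / union if union else 1.0


# Hypothetical car masks on the original and on a recolored image.
car_original = [[False, True, True],
                [False, True, True]]
car_green_sky = [[False, True, True],
                 [False, True, False]]

iou = mask_iou(car_original, car_green_sky)  # 3 shared pixels out of 4 in the union
```

An IoU close to 1 for every object would support the claim that the sky color leaves the segmentation unchanged, while a clearly lower value would flag a mask that shifted.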

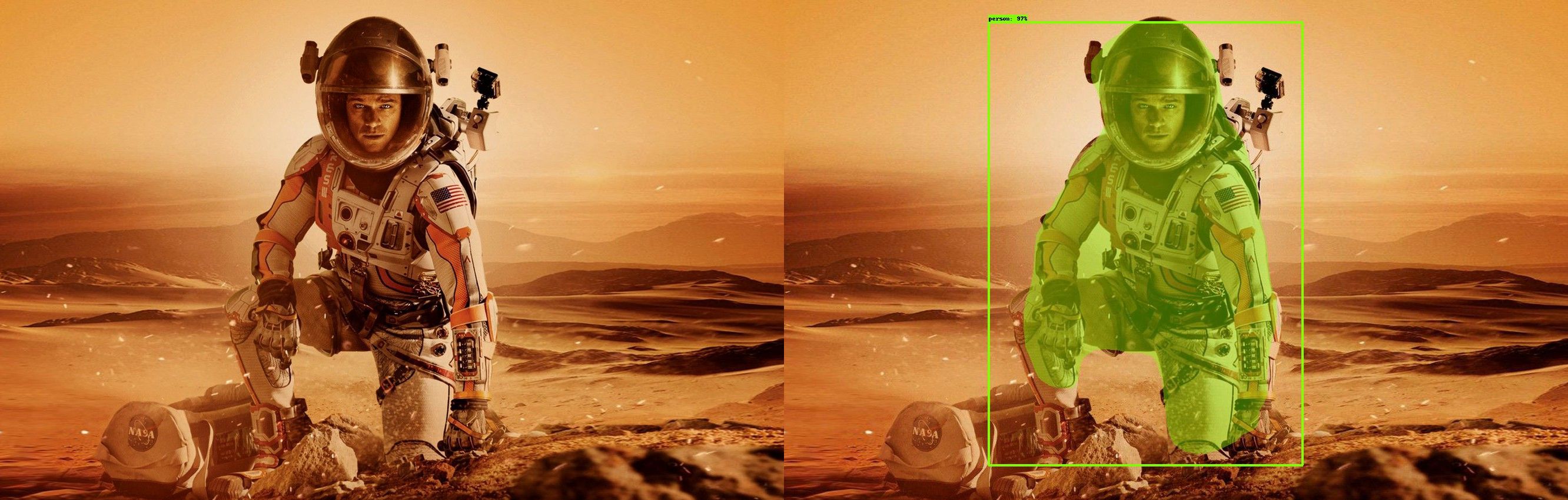

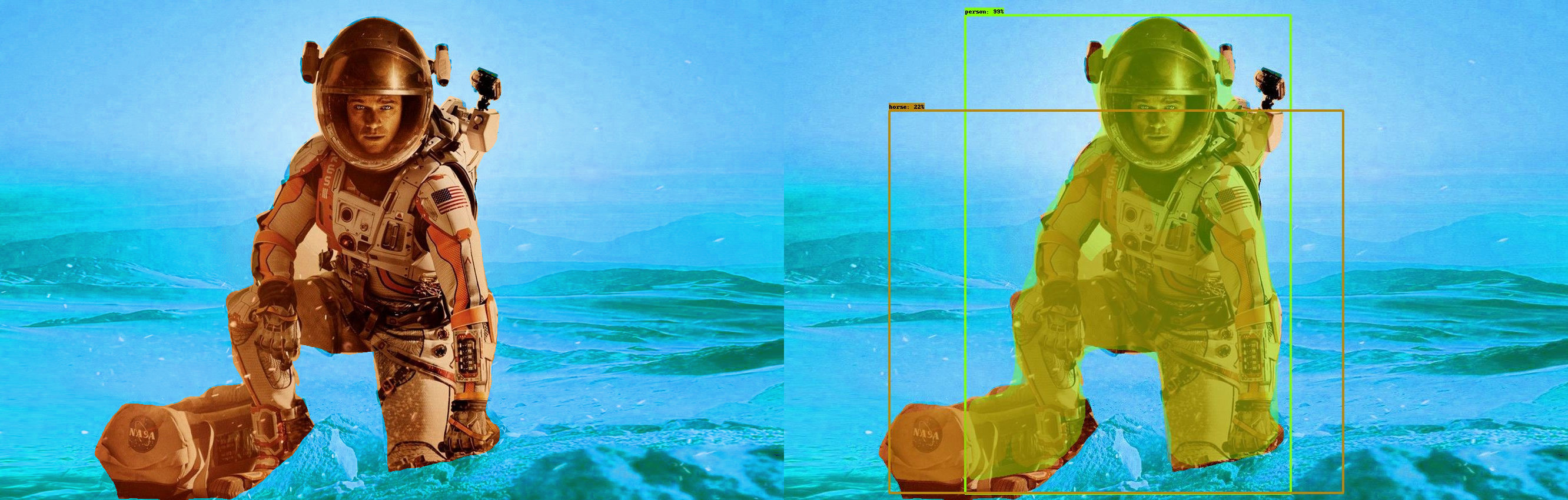

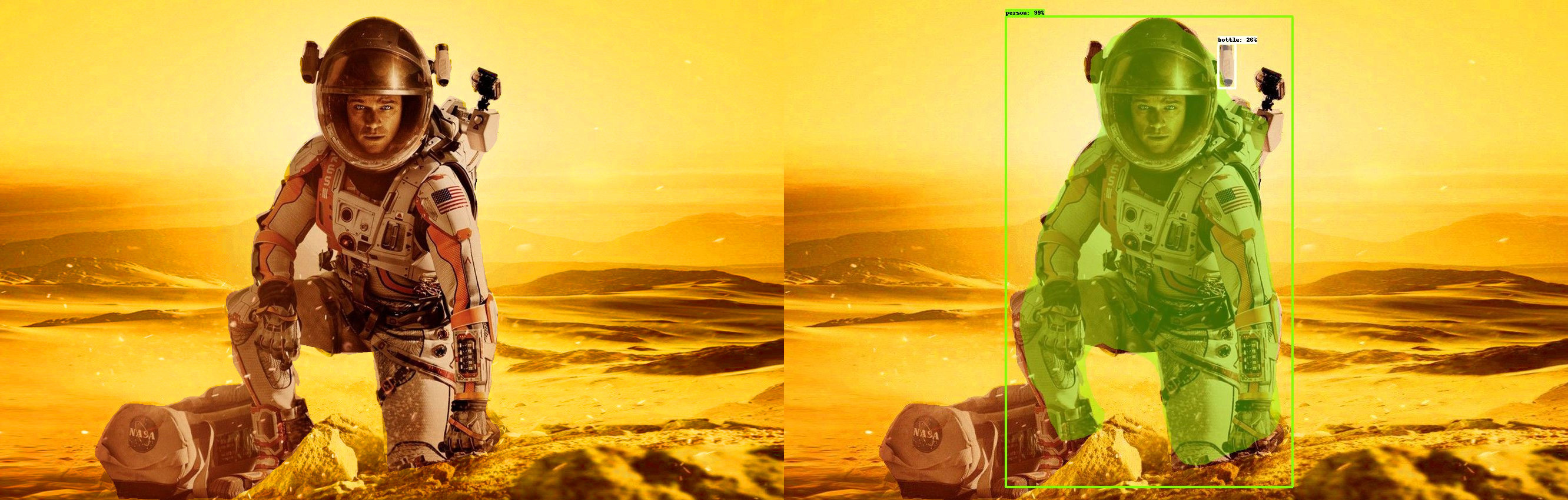

A poster from the movie The Martian

In the Stanford photo the sky color had no effect on the foreground segmentation of cars in the parking lot. To make sure this was not just due to the good separation of the sky and the cars in the photo, I looked at more challenging situations where the object to be segmented is surrounded by the sky directly. I took the poster for the movie The Martian, in which a crouching astronaut is surrounded by the orange Martian sky (and the orange ground at the bottom). The original image and its segmentation are shown here:

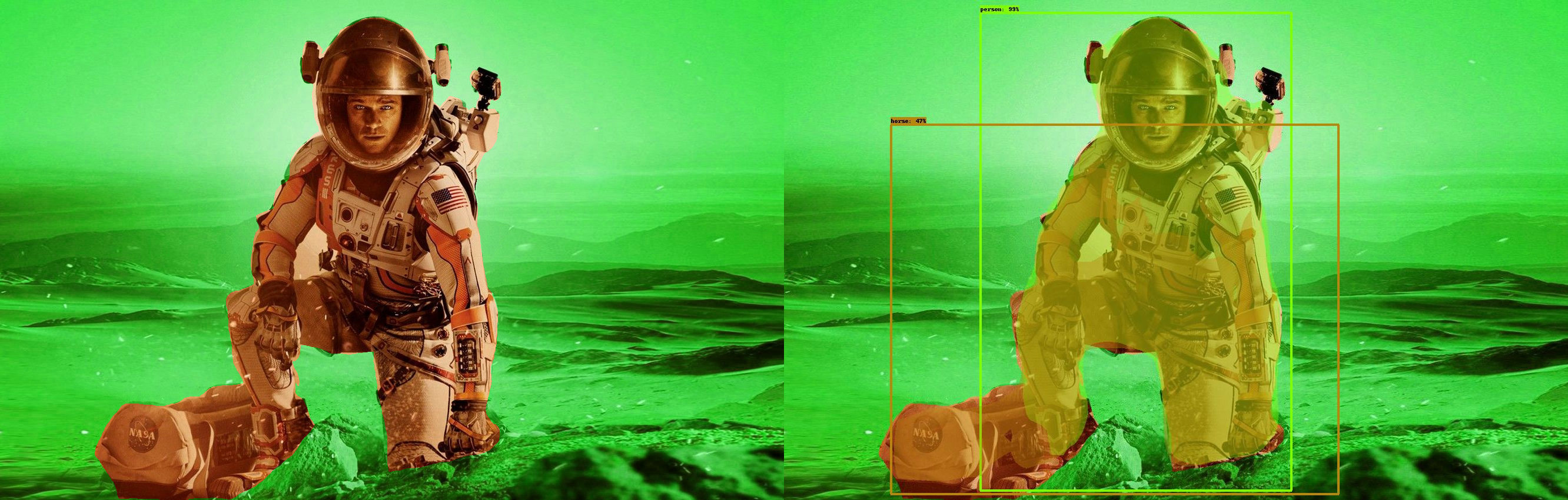

I cropped out the astronaut by hand, and recolored the sky behind him green, purple, blue and yellow. The green background has no effect on the very confident detection of the astronaut, and it doesn’t seem to affect the shape of the segmented polygon either. The question of whether a green background would completely confuse modern computer vision systems is therefore answered in the negative: they seem very robust to such out-of-distribution sky colors.

Interestingly, a new object, a horse, is detected in a part of the astronaut and his bag, albeit with only 47% probability (compared to 99% for the astronaut himself). This is potentially due to the brownish color of the astronaut and the green ground, which might look like a grassy field to the network. Even so, the horse detection stays at a low 47% confidence, while the segmentation of the human remains nearly perfect.
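Low-confidence detections like this one are usually dropped by applying a score threshold before visualizing the results. Here is a minimal sketch using the two confidences quoted above; the dictionary format, the function name `filter_detections` and the 0.5 threshold are assumptions for illustration, not the settings of the Colab.

```python
def filter_detections(detections, min_score=0.5):
    """Keep only detections whose confidence is at least `min_score`."""
    return [d for d in detections if d["score"] >= min_score]


# Confidences for the green-sky poster as reported in the text.
green_sky_detections = [
    {"label": "person", "score": 0.99},
    {"label": "horse", "score": 0.47},
]

kept = filter_detections(green_sky_detections)
kept_labels = [d["label"] for d in kept]
```

With this assumed threshold only the astronaut survives, and the spurious horse is discarded.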

For the purple sky, the segmentation is as good as for the original Martian sky:

For the blue sky, which should technically be in distribution for the training set, we again get a confident and precise detection of the human with 99% probability and the illusory horse, this time at 22%. Since the horse appears in both the green and the blue sky images, I would argue that it is the brownish color of the astronaut that confuses the network, rather than the grass-like green background in the green image.

For the yellow background, the human is again segmented perfectly, while there is a new detection of a bottle that is actually the astronaut’s helmet-mounted camera/light.

Overall, the out-of-distribution sky colors had no detrimental effect on the segmentation of the astronaut. The only effect that we saw was a low confidence “horse” appearing as a part of the astronaut and his bag in the blue and green photos, and a segmentation of the astronaut’s camera/light as a “bottle” in the yellow photo. In this setup, the sky was surrounding the astronaut on all sides, unlike in the Stanford photo. Yet his segmentation was completely unaffected, demonstrating that large vision systems do not break with out-of-distribution sky colors nearly as easily as some could expect.

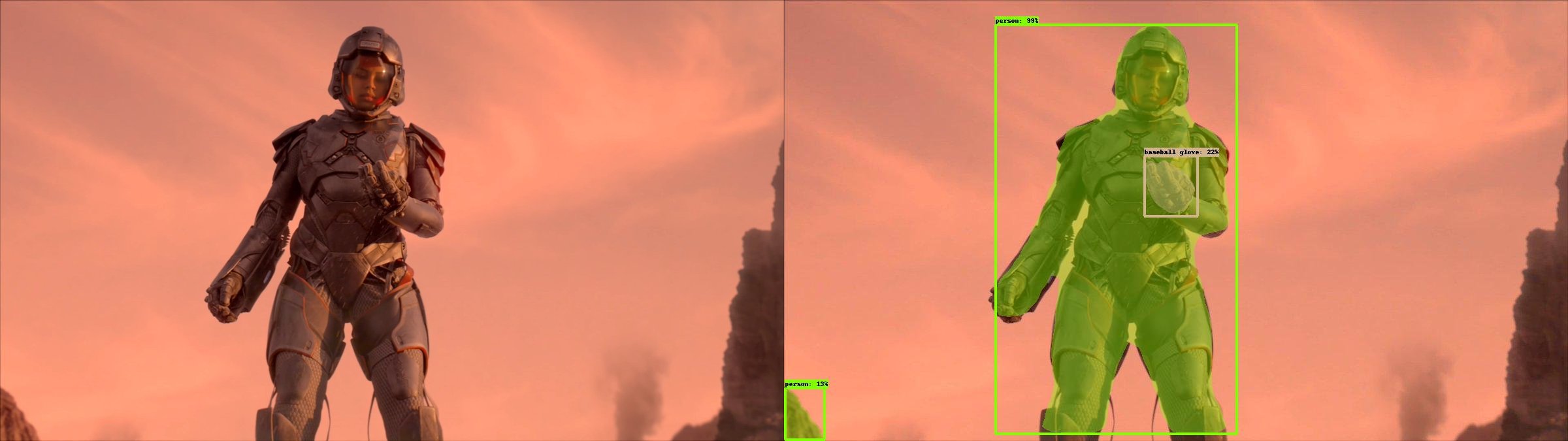

“Natural” scenes from The Expanse

To avoid potential problems with my unprofessional image editing skills, I also looked up a few images from the (really good!) TV series The Expanse (the books are even better). Here’s an image of several Martian marines on the surface of Ganymede (a moon of Jupiter):

The segmentation has no problem discerning the four humans in a very alien environment, even with their “backpacks” – parts of reconnaissance armor.

For a potentially harder case, here’s a single marine on the surface of Mars:

The network not only segmented the human in an exoskeleton perfectly, but also detected her glove correctly (but with low probability).

For a harder image still, here’s a picture of the Mariner Valley from the series’ opening sequence, where the humans are quite small, in a very out-of-distribution environment, and with an orange-looking sky and ground:

The network doesn’t have any trouble detecting the four humans and their transport (labeled as “boat” here). It makes a few spurious low-confidence detections in the background, but the main focus of the image, the humans, is detected perfectly.

Conclusion

In this post I experimentally verify that the pre-trained Mask RCNN from the Google Colab does not get confused at all by very out-of-distribution sky colors. This goes against some people’s intuition that large vision systems might not be very robust to scenes that were not present in the training set. In my verification I use natural scenes as well as scenes from movies where I crop out the sky and change its color to purple, green, yellow, red and blue. Modern computer vision networks seem more resistant to such out-of-distribution scenes than we might naively expect.

If you find this blog post useful and would like to cite it, please use the following BibTeX:

@misc{Fort2021greensky,
  title={Out-of-distribution sky color and image segmentation},
  url={https://stanislavfort.github.io/2021/03/22/green_sky.html},
  author={Stanislav Fort},
  year={2021},
  month={March}
}